Perceptions of ML in Selection

May 8, 2025

By Chi-Leigh Warren

User Perceptions of Machine Learning in Selection

Testing the accuracy of artificial intelligence (AI) within a controlled research study differs greatly from real-world applications, as the latter potentially have serious consequences, legal considerations, and ethical implications concerning personnel decisions. Increased interest in AI and machine learning (ML) within the field of I/O psychology brings many novel data analysis and computing techniques that were neither possible nor accessible to our field before. However, with great power comes great responsibility.

Despite a great boom in research on ML and how it could support personnel decisions, the ability to apply ML in practice is somewhat limited. Why? ML algorithms and methods are evolving very quickly, but employment law is not.

Selection, specifically, is fraught with legal considerations and red tape, and organizations are understandably hesitant to use ML in selection contexts at this time. Because few laws currently regulate how ML is used in hiring, a method adopted today could conflict with laws passed later, exposing the organization to litigation for violating employment law.

Part of creating legal defensibility of ML methods in selection is to proactively consider the ethical implications of using ML to hire candidates. Currently, there is not enough consistency in the field to support a singular best practice for selection, creating more challenges for regulating ML use and understanding possible risks. How might we test how consistent ML is in selection? And how do people perceive the use of AI in selection? With a three-part study, we investigated how consistent ML methods were at making simulated hiring decisions, proposed a new integrated ML approach to reduce bias, and assessed how candidates and hiring managers perceived ML in selection and its methodological integrity.

Study 1: When comparing individual ML algorithms, how often do they agree when making simulated selection decisions?

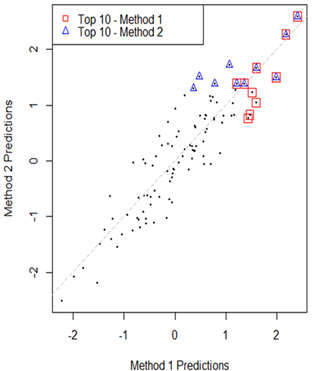

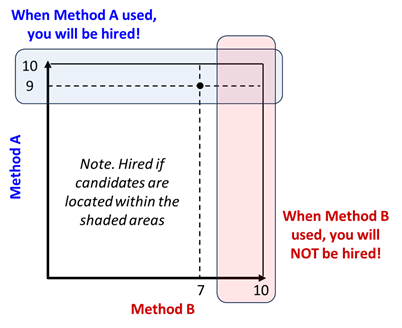

In part one of a recent study, we found that even when their predictions are highly correlated and they are fed the same data, different ML algorithms often disagree on which candidates to hire. This disagreement is troublesome in selection, where small discrepancies can have huge impacts. For example, if Algorithm A were used to assess your applicant data, you could be hired, but if Algorithm B were used to analyze the same data, you might not get the job. Understandably, this is a legal minefield: applicants are upset, and the company is worried about potential lawsuits. Even more troubling, there is no consensus that any particular ML method is superior to the others. The choice of method should be based on the organization’s specific selection criteria and needs, which are very labor-intensive to specify. This makes it extremely challenging, if not nearly impossible, to justify any one ML algorithm as better than another for selection.
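To make the idea of disagreement concrete, here is a minimal sketch of how agreement between two algorithms on a hiring decision could be measured. It is an illustration only, not the procedure used in the study: the function names, the made-up scores, and the choice of "share of hires both models pick" as the agreement measure are all assumptions.

```python
def top_k(scores, k):
    """Return the set of candidate indices with the k highest scores."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return set(ranked[:k])


def selection_agreement(scores_a, scores_b, k):
    """Fraction of the k hires that both models would select."""
    return len(top_k(scores_a, k) & top_k(scores_b, k)) / k


# Hypothetical predicted performance for six candidates from two models.
# The two score lists are similar overall, yet the hiring cutoff splits them.
model_a = [0.91, 0.85, 0.80, 0.79, 0.60, 0.40]
model_b = [0.88, 0.80, 0.78, 0.81, 0.62, 0.45]

hires_a = top_k(model_a, 3)  # candidates 0, 1, 2
hires_b = top_k(model_b, 3)  # candidates 0, 1, 3
agreement = selection_agreement(model_a, model_b, 3)  # 2 of 3 hires shared
```

In this toy example the two models rank candidates almost identically, but candidate 2 is hired under one model and candidate 3 under the other, which is the kind of discrepancy described above.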

Study 2: Proposing an Integrated ML Approach to Reduce Disagreement on Who to Hire

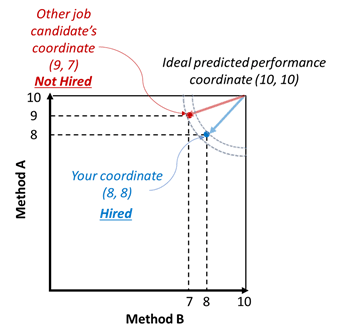

In part two of this study, we wanted to see if there was a way to reduce disagreement between single ML approaches. We proposed an integrated machine learning method for selection that used a distance-based approach to integrate multiple algorithms’ predictions of each candidate’s future performance. The ten simulated candidates whose integrated scores were closest to the ideal future performance score were hired (for our study, this score was based on cognitive ability, self-efficacy, work sample test, job knowledge, conscientiousness, emotional stability, and overall job performance). Compared with a single ML approach, the integrated approach produced fewer cases of disagreement, that is, more consistency, when deciding who should be hired. This is promising and suggests that the integrated approach reduces bias and inconsistencies in ML-based hiring decisions compared to single ML approaches.
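A distance-based integration of this kind might look roughly like the sketch below. This is a simplified illustration under stated assumptions, not the method from the study: the post does not specify the distance metric or how the ideal score is defined, so Euclidean distance, a single ideal value of 5.0, the candidate labels, and the function names are all hypothetical.

```python
import math


def integrated_distance(predictions, ideal):
    """Euclidean distance between one candidate's per-model predictions
    and the ideal score (assumed metric, for illustration only)."""
    return math.sqrt(sum((p - ideal) ** 2 for p in predictions))


def select_closest(candidates, ideal, k):
    """Return the names of the k candidates whose predictions lie
    closest to the ideal score, nearest first."""
    ranked = sorted(
        candidates,
        key=lambda name: integrated_distance(candidates[name], ideal),
    )
    return ranked[:k]


# Hypothetical predicted performance (1-5 scale) from three models.
candidates = {
    "A": [4.6, 4.4, 4.5],
    "B": [4.8, 3.9, 4.1],
    "C": [4.0, 4.1, 4.2],
    "D": [3.2, 3.5, 3.0],
}
IDEAL = 5.0  # assumed ideal future performance score

hired = select_closest(candidates, IDEAL, 2)
```

Because every model’s prediction contributes to a single distance, a candidate who is rated very highly by one model but poorly by the others (like "B" here) is ranked below a candidate whom all models rate consistently well.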

Study 3: Assessing Hiring Manager and Employee Perceptions of ML in Selection

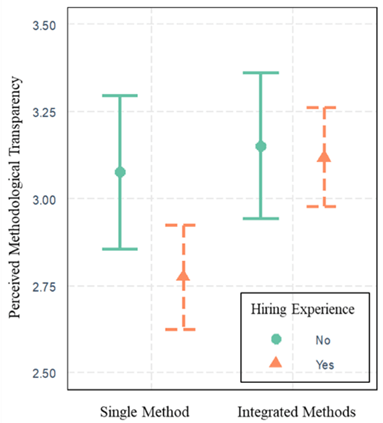

Part three of this study was presented at the 2025 Society for Industrial and Organizational Psychology (SIOP) Annual Conference in Denver, Colorado. In part three, we investigated applicants’ and hiring managers’ perceptions of using single ML and integrated ML methods in the selection process to hire hypothetical candidates. The integrated approach received higher ratings of transparency and methodological integrity than the single ML approach, and these differences were greater for participants with hiring experience than for participants without it.

Conclusion

ML and AI are at the forefront of the future of work, but employment law and regulations, especially regarding selection, have not caught up to these quickly evolving tools. Applying ML within organizations, beyond controlled research with simulated scenarios, carries many legal risks and ethical considerations that should be taken seriously. For selection, different ML methods may not agree on who to hire despite using the same applicant data, resulting in hiring decisions that may not be legally defensible at this time. Most organizations are hesitant to take on the legal risk of adopting ML methods for selection decisions before laws are passed. Research should focus on understanding the risks and ethical considerations of applying AI to HR decisions, which will be essential for informing future laws and regulations on AI in the workplace. ML is a powerful tool but should always be used to support, not replace, human expertise and judgment.

Poster Citation:

Lee, J., Voss, N. M., Stoffregen, S. A., Giordano, F. B., Warren, C., Chlevin-Thiele, C., & Klos, L. S. (2025, April 2-5). Integrated ML methods and integrity: Reactions to machine learning in selection [Poster Session]. Annual meeting of the Society for Industrial and Organizational Psychology, Denver, CO.

Chi-Leigh Warren joined FMP as an Intern in the summer of 2022. She is currently a student in Kansas State University’s I-O Psychology Ph.D. program. Her research focuses on intrapreneurship, and she is interested in investigating how entrepreneurial employee behaviors benefit organizations and whether specific individual differences make entrepreneurial individuals entrepreneurial.